Michael M. Santiago/Getty Images

Since the public release of ChatGPT in November 2022, Google has been struggling to adapt to a new world of generative AI, despite developing much of the technology behind it. The company’s recent responses to AI content are already changing how you browse the web and may alter the English language itself.

First, let’s explain how the online content business works. Before the web, almost everyone subscribed to a newspaper, and if you could get your content into one of those newspapers, someone was guaranteed to read it, because the newspaper had a built-in audience. The trick was convincing a newspaper editor to grant you access, and if you couldn’t do that, no one would read what you wrote.

Now, there are no gatekeepers, at least in theory. Instead of a few dozen newspapers, we have millions upon millions of websites. The problem now isn’t getting past a gatekeeper but trying to stand out from the noise. Some destination sites, like Blaze Media, still have a built-in audience. But for the vast majority of independent writers, your best bet is search engine optimization.

SEO is essentially a big bag of tricks writers develop so that their content shows up in Google Search results, ideally on the first page of a search result and even more ideally at the very top of the page. SEO has a bit of a nasty reputation in some circles, largely thanks to ugly “black hat” techniques to fool Google, such as keyword stuffing, where a writer peppers a post with the targeted search term. You’ve probably seen things like this in the past:

Are you looking for the best wireless headphones? Read on for our guide to the best wireless headphones. We’ve assembled a list of the best wireless headphones that anyone shopping for best wireless headphones can appreciate. We have wireless headphones in every price range for all of your wireless headphone needs.

You usually don’t see as much of that now, because Google’s algorithm will punish a post that overdoes keyword stuffing. However, it will always be a somewhat viable technique because Google must programmatically analyze billions of websites and determine what to rank in search results, with the goal being to please the end user. And one of the ways a computer program can analyze those websites is by analyzing which keywords it hits.
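To make the idea concrete, here is a minimal sketch of one way a program might measure how heavily a page leans on a target phrase. This is an illustration only, not Google's actual method, which is not public. The function name `keyword_density` and the sample text are hypothetical.

```python
import re


def keyword_density(text: str, phrase: str) -> float:
    """Return the fraction of words in `text` that belong to occurrences of `phrase`.

    Hypothetical helper for illustration; real ranking systems are far more involved.
    """
    # Lowercase and split into simple word tokens.
    words = re.findall(r"[a-z0-9']+", text.lower())
    target = re.findall(r"[a-z0-9']+", phrase.lower())
    if not words or not target:
        return 0.0
    n = len(target)
    # Count every position where the phrase appears as a run of consecutive words.
    hits = sum(1 for i in range(len(words) - n + 1) if words[i:i + n] == target)
    return hits * n / len(words)


sample = (
    "Best wireless headphones here. "
    "Our best wireless headphones guide lists wireless headphones."
)
print(keyword_density(sample, "wireless headphones"))
```

A very high score suggests stuffing, but any cutoff would be a judgment call rather than a known rule.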

Google wants you to think that the trick to your content showing up in search results — and thus being read — is simply to write good content. I wish that were the case, but without any sort of SEO, chances are whatever you publish will fall by the wayside. If you hate SEO, blame Google, which practically owns the web and sets the rules, not the people trying to eke out a living from it.

When ChatGPT hit the scene, SEOs quickly discovered that it was really good at SEO. You could go to ChatGPT, tell it the keyword you were targeting, and it would automatically write a decent blog post that would quickly rank on Google. This only makes sense, because GPT learns about language the same way Google does: by automatically skimming the web, scooping up data from websites, and using it to teach the algorithm. It was almost as if GPT were created for SEO, because it’s designed to interpret the intent of your request and produce content that satisfies it, which is the same aim as Google’s.

This was clearly a threat to Google’s massive search business. By and large, people don't want to read AI-generated content, no matter how accurate it is. But the trouble for Google is that it can’t reliably detect and filter AI-generated content. I’ve used several AI detection apps, and they are 50% accurate at best. Google’s brain trust can probably do a much better job, but even then, it’s computationally expensive, and even the mighty Google can’t analyze every single page on the web, so the company must find workarounds.

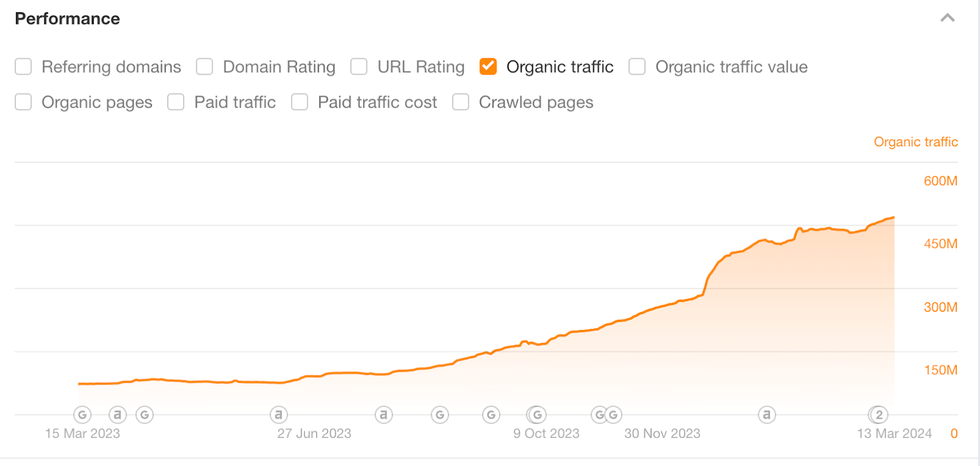

This past fall, Google rolled out its Helpful Content Update, in which Google started to strongly emphasize sites based on user-generated content in search results, such as forums. The site that received the most notable boost in search rankings was Reddit. Meanwhile, many independent bloggers saw their traffic crash, whether or not they used AI.

Google’s logic is pretty simple: Give more weight to forum sites like Reddit and Quora, where content is moderated by human beings who, in theory, will remove AI spam. Google is essentially offloading the task of AI detection to forum moderators, who are often unpaid, and it’s computationally cheap for Google to do so.

Funnily enough, SEOs are now spamming these sites to game Google Search. Just as interesting, Google recently signed a deal with Reddit to acquire its user data to train Gemini, Google’s own AI project.

But that update just wasn’t enough to dissuade AI-powered SEO. Google has a strong distaste for SEOs who try to game its system, especially the ones who make it look foolish. And boy, have GPT-wielding SEOs made it look foolish. Perhaps the most egregious example was the great SEO heist of late 2023.

On November 24, 2023, SEO Jake Ward announced on X that he had pulled off an “SEO heist” that stole 3.6 million views from a competitor. The competitor was Exceljet, a well-established site with tips about Microsoft Excel.

In the X thread, Jake explained how he pulled it off. First, he extracted Exceljet’s sitemap. A sitemap is a document that lists every page on a website, and most websites maintain one to make it easier for search engines like Google to crawl them, which in turn helps their content show up in search results. You can view Blaze News' sitemap for yourself.
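For readers who have never opened one, a sitemap is usually a small XML file. The sketch below parses a made-up sitemap with Python's standard library and lists its URLs. The `example.com` addresses and the function name `urls_from_sitemap` are assumptions for illustration, not anything taken from Exceljet or from the thread.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

# Hypothetical sitemap content, inlined so the example needs no network access.
EXAMPLE_SITEMAP = """
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/articles/first-post</loc></url>
  <url><loc>https://example.com/articles/second-post</loc></url>
</urlset>
""".strip()


def urls_from_sitemap(xml_text: str) -> list[str]:
    """Return every <loc> URL listed in a sitemap document."""
    root = ET.fromstring(xml_text)
    return [
        loc.text.strip()
        for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)
        if loc.text
    ]


for url in urls_from_sitemap(EXAMPLE_SITEMAP):
    print(url)
```

Large sites often split their sitemap into several files behind an index file, which this sketch does not handle.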

Jake then took every URL on Exceljet’s website and extracted its “slug,” which is a descriptive part of a web address. So Jake took a list of articles like these:

And fed them into a tool called Byword.ai, which uses AI to mass-produce blog posts. For a relatively small fee, Jake produced thousands of articles per day replicating the same topics and structure as Exceljet. And because the AI-produced content appears “perfect” to a search engine, it readily outranked Exceljet.
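As a rough illustration of what a "slug" is, the sketch below pulls the last path segment out of a URL and turns it back into readable words. The URL is invented, the helper names are hypothetical, and this is not a reconstruction of the tooling Jake actually used.

```python
from urllib.parse import urlparse


def slug_from_url(url: str) -> str:
    """Return the last path segment of a URL, which is typically the slug."""
    path = urlparse(url).path.rstrip("/")
    return path.rsplit("/", 1)[-1]


def slug_to_words(slug: str) -> str:
    """Turn a hyphen- or underscore-separated slug into a plain phrase."""
    return slug.replace("-", " ").replace("_", " ").strip()


# Hypothetical URL used only to show the transformation.
url = "https://example.com/articles/how-to-sum-a-column/"
slug = slug_from_url(url)
print(slug)
print(slug_to_words(slug))
```

Because slugs tend to mirror article titles, a list of them amounts to a list of topics, which is why they were useful as input.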

But Jake made a huge mistake: bragging about it in public. Google soon de-indexed his site, which means it no longer appears in search results at all. He’s also deleted his X account.

The SEO heist was clearly Google’s last straw, and it’s now unveiled a much stronger search update to thwart AI.

Google recently unveiled a new update to its spam policies specifically targeting “low-quality, unoriginal results,” most specifically through its new “scaled content abuse” policy. In short, if you’re running a website built around mass-publishing AI-created articles, expect your traffic to soon go to zero, either through algorithmic changes or by a “manual action” by one of Google’s many contracted human reviewers.

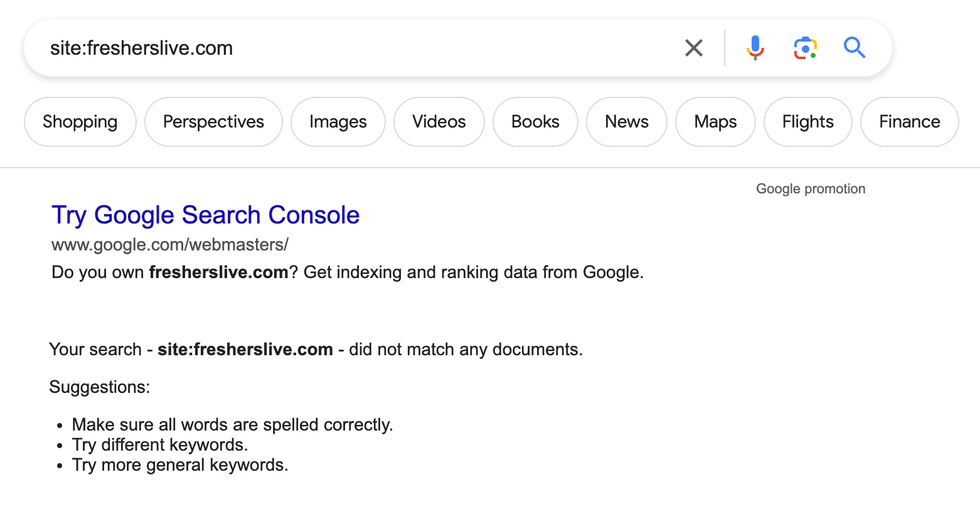

In fact, Google started issuing those manual actions almost immediately after the announcement, de-indexing hundreds of sites with little warning, like fresherslive.com and zacjohnson.com. You can check this yourself by going to Google and searching for site:domainname. If nothing shows up for the domain, it’s been removed from Google’s index.

So far, we don’t quite know what the algorithmic penalty will be for AI-produced sites or if there will even be one. It will take weeks for the update to roll out for every site in Google’s index. For now, Google is manually flagging the sites to remove from its index, and while there may be some innocent victims, most have content almost entirely created by AI.

AI paranoia is striking not only search engine land but social media as well. To be sure, Twitter and LinkedIn both are full of obviously GPT-generated responses. No matter how much prompt engineering you perform with GPT, it has a certain writing style that’s easy to spot once you know how to recognize it. The grammar is a bit too perfect. GPT likes long words and well-padded phrases. GPT doesn’t make spelling errors or typos. And as GPT and other generative AIs become more advanced, their content will become harder and harder to spot.

Already, I regularly see clearly human writers on social media being accused of generating their text with GPT because they use college-level words or whimsical phrases. Ironically, as generative AI’s output becomes more human, good human writers may be punished by algorithms or human moderators who suspect that their writing is a bit too good. Although I doubt Google has the capability today to scan billions of individual web pages for AI content, it’s a problem the company is undoubtedly working to solve. In fact, the future of Google’s search probably depends on it.

I happen to be trained as a blacksmith, and one of the things my teacher told me is never to try to remove hammer marks and small imperfections from a piece, because they are evidence that it was made by hand and not by a machine, and that’s what the customer is paying a premium for.

Is it possible that not long into the future, writers will start deliberately introducing misspellings, typos, and grammatical errors into their text just to leave behind little “hammer marks” that prove the content was produced by a genuine, bona fide human being? Will those deliberate misspellings and errors eventually be enshrined in the English language as they become normalized?

What if it’s our flaws and imperfections that ultimately make humans special?

Josh Centers