gremlin/Getty Images

“I’m Yul Brynner, and I’m dead now.” That was how comedian Bill Hicks only slightly misremembered the actor’s eerie anti-smoking ad, broadcast after his death in 1985.

In 1973, Brynner played a killer android, not a human ghost, in sci-fi master Michael Crichton’s feature-film directorial debut, "Westworld." Much like his other franchise about a theme park gone wrong, "Westworld" concerned the radical proposition that building fake people was bad for real ones.

Though the movie earned enough acclaim to spawn a sequel and a TV series, CBS axed the spin-off “Beyond Westworld” three episodes in, even though five had been produced. For whatever reason, audiences had had enough. The ratings spelled doom for the franchise until, that is, HBO resurrected “Westworld” in 2016.

Much like its serially abused robo-slaves, the dystopian tale came back to life and once again seized our imaginations. Audiences wanted more, and they got it: four seasons, with a fifth planned.

Once again, something went wrong. Viewers soured on the series' ever more fruitless convolutions. What was groundbreaking a handful of years ago was heartlessly put in the ground last year, taking, it seems, Crichton’s prophetic warning with it.

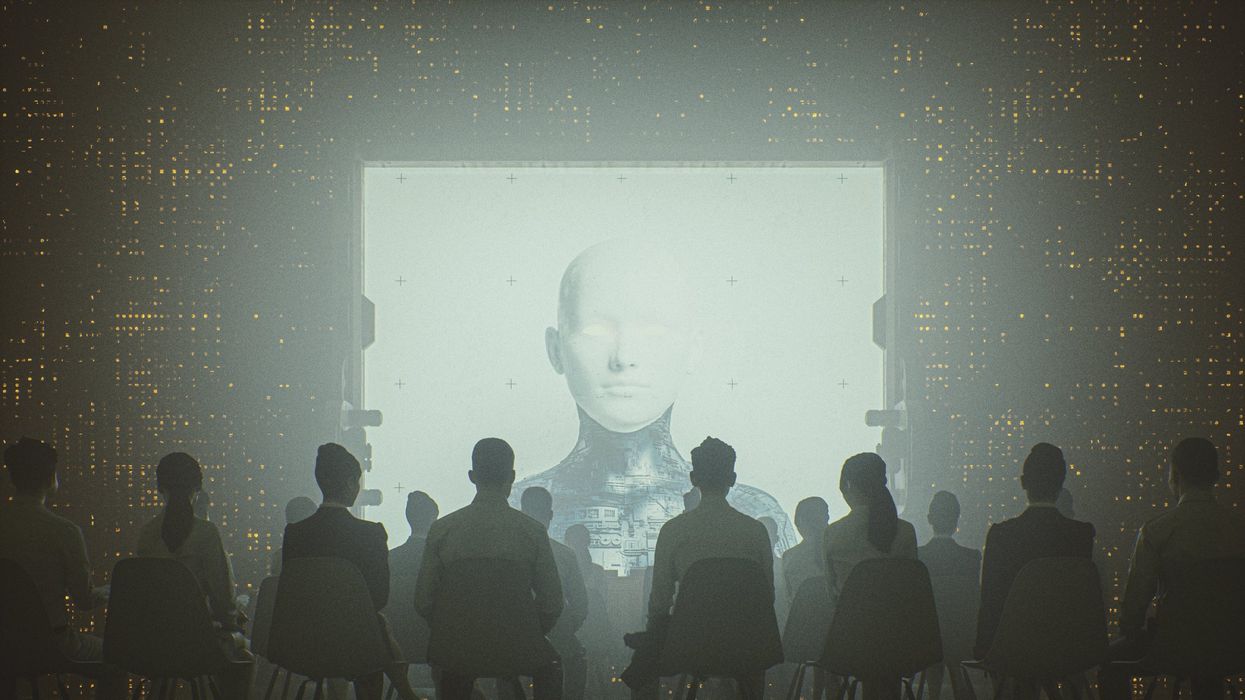

With breathtaking continuity, the specter of a real-life “Westworld” has risen to replace the storied show. No amount of positive spin on artificial intelligence has dispelled our understanding that the true promise of supertech is to make it ever more difficult for us to recognize who’s a human and who’s a machine.

Of course, some like it that way. Some are convinced that they’ve made their peace with this apparent inevitability. They seem to have forgotten Crichton’s lifelong counsel that the inevitable is the ultimate illusion. Like “Westworld’s” discarded humanoid bots or the franchise’s own abruptly cancelled incarnations, today’s awe-inspiring innovations are tomorrow’s trash.

Given the choice, people often turn their backs on what once seemed impossible to let go. But this time, the AI future we’re promised is one we can’t cancel.

In fact, according to a certain influential set of so-called AI “doomers,” the machines will soon cancel us human beings. Insider chatter on X.com circles around the meme that many AI leaders give our species coin-flip odds of surviving the advent of full-blown artificial intelligence. Common sense suggests that’s not a chance worth taking, but the strange thing about 50/50 odds is that they defy or even defeat risk analysis, leaving everyone with a “who’s to say?” vibe to go off of.

That’s why, judging from past performance, we ought to begin by recognizing that our fate and fortunes are always in doubt and always in our hands. Suicide is always one foolish action or evil decision away. In the same way, life is always there for the living, by way of spiritual discipline strong enough not only to stop suicidal acts before they start but also to bend our wills toward responsibility.

Notably, nobody’s freaking out about the prospect that Russia or China will kill the human race with their AIs. They’re worried about the West, because here we’ve demonstrated both the great fruits of freedom well utilized and the nightmarish consequences of its abuses. From that standpoint, AI is just another trial, just the latest and perhaps the greatest test.

That’s why it’s so important that we stop arguing abstractly about probabilities and scenarios and instead ensure that AI, like all technology, is as well distributed as possible. Bug-eyed tech optimists like this approach because it lets them geek out on their fantasies of everyone flying around like Iron Man. But the truth is that well-distributed digital technology matters because of the limits it helps cultivate against tech overreliance and overdevelopment.

First, and most obviously, when tech isn’t politically concentrated in the hands of a tiny few, governments and regimes have less capacity to wield it against their own people. When they make bad decisions or take bad actions, the damage is limited by what the people can do to correct, mitigate, or avoid that behavior. Plus, technology organized at the local level can strengthen communities and enable groups with big disagreements to pursue their own choices at a sustainable human scale.

Second, and still more significantly, well-distributed tech helps ensure that spiritually disciplined people and institutions can wield authority over technology’s use and development. As important as it is to keep technology from transforming America, and our most fundamental rights and duties along with it, beyond recognition, politics and law are neither the only nor the best ways to control the role of technology in our lives.

But suppose technology and its development are concentrated in the hands of the regime alone. In that case, it will be hard for the spiritually disciplined to wield any authority over tech unless they take government jobs and get intimately involved in the dark side of political power. That’s especially dangerous in America, where a flourishing spiritual life is a necessity and theocracy is forbidden.

Applying our best spiritual and political resources to keep tech well distributed will give us our best shot at ensuring that today’s AI novelties don’t become an albatross a week, a year, a decade, or a century from now. So long as we preserve that all-important off switch, for AI or any other advanced tech, we won’t need to pull the plug.

James Poulos

BlazeTV Host