
Part 1: What is it to be right-wing?

It’s an open secret that sci-fi is the genre of the left. Pick out any landmark title, and you’ll likely find the author fits one or more of these three descriptors: progressivist, rationalist, and atheist. And while there are standout exceptions, for every Heinlein, there’s an Asimov and a Clarke. For every religious Walter M. Miller, there are a dozen other sci-fi authors espousing various iterations of the revolution.

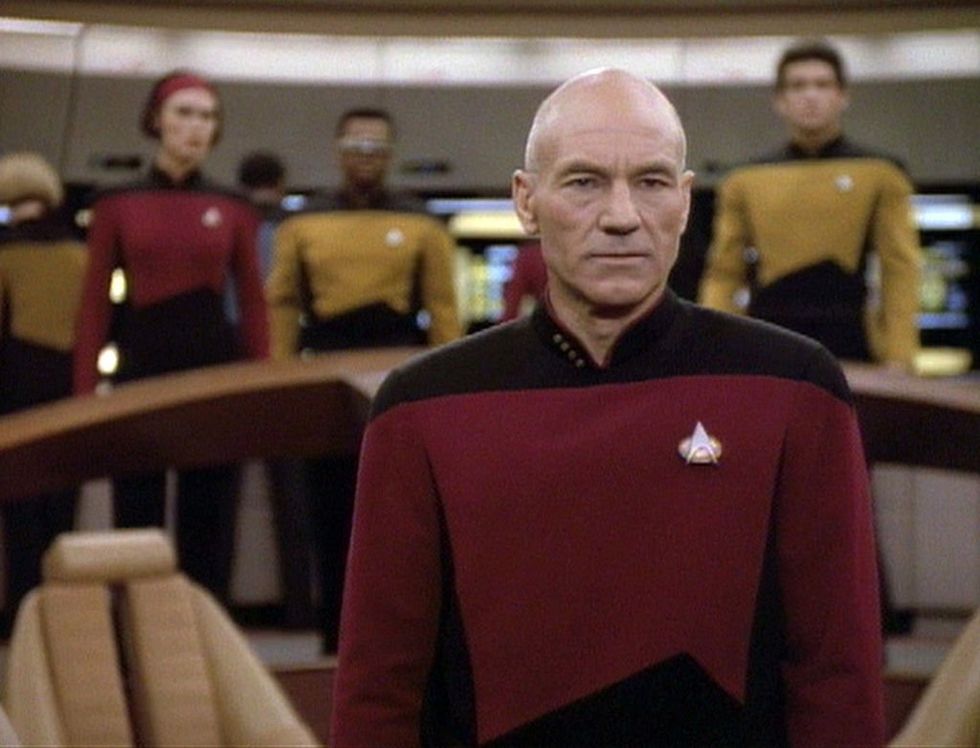

While I wouldn’t say science fiction is necessarily defined by this strain of leftist thought, it’s undeniable that the genre has served as a hotbed for progressive ideas since the early days of H.G. Wells and Jules Verne. Gene Roddenberry’s "Star Trek" wasn’t a step into a bold new frontier so much as it was advancing ideas that had long been coalescing among these writers. Ideas that, upon hitting the mainstream, calcified into the tropes that we now consider part and parcel of the genre. Uploading minds into computers, human evolution guided by a higher intelligence, the idea that the world can unify under a global government — they are all expressions of leftist ideas.


Take the first of these, uploading minds into computers, for example: The left presumes that humans are nothing more than blank, abstract consciousnesses piloting meat sacks. Leftists see the mind as completely separate from the body and the body as irrelevant to the self-expression and identity of the mind. In today’s political terms, this is the “trans” movement. In sci-fi, this is taken to the nth degree, where the mind itself is often separated from the body and moved into a computer to become an abstract self-identity.

But of course, this assumes the essence of a human mind is the electrical firing of neurons and not emergent in the physical reality of those neurons. The left believes the problem of sapience is one of sheer processing power, that self-awareness is merely translating neurons into ones and zeroes. But how many people would agree that a neuron and a computer program written to model a neuron are ontologically the same thing? Or to put it more simply, how many people would say Gary and the little black box running a computer model of Gary are indistinguishable in every way, shape, and form?

Science fiction is riddled with these often unquestioned assumptions. Or, when they are brought under scrutiny, they are shuffled off-camera to avoid the harder implications. As much as I love "Star Trek," I always groan in frustration when they bring up an interesting concept because I know it will only last as long as the episode. A duplicate of your entire crew created from a learning metallic liquid? A virus that rapidly ages its victims? A nonphysical entity mentally interrogating your officers? Welp, these things happen, I suppose. Nothing a vacation on the Holodeck wouldn’t fix.

It all raises a question: if so much of sci-fi is left-wing, what might right-wing sci-fi look like? Anything more than a predictably ideological counter-spin on the conventions of the genre? Or might we find, somewhere, visionaries and luminaries capable of more? Might we create an artistic scene where new Frank Herberts are more a rule than an exception?

Well, the first problem we have before us is what even defines right-wing sci-fi. We need a definition or a set of criteria before we can say something is left-wing vs. right-wing. And I don’t mean right-wing in that cringe-inducing manner where conservatives are just dunking on the left. I mean genuine art that seeks after the true, the good, and the beautiful.

What I propose here is not the be-all and end-all. It’s more like the start of a conversation, a set of principles that can helpfully distinguish what is genuinely right-wing from what is subversive. I myself will probably modify these rules as time goes on. But definitionally speaking, I think these three criteria are essential. So, here are Isaac’s three laws of right-wing science fiction:

Let’s break down what I mean with each point. The first rule speaks to the beating heart of sci-fi, which is man’s relationship with technology. The left sees progress as an inherently good thing. In its view, all problems of the human condition are problems of information processing. If there is a problem facing society, it is either that we lack the required knowledge to solve it or that there are inefficiencies in our processing speed.

Progress and technology are increasing both, so they must be fundamentally good. We can solve the famines in the third world with advanced agriculture and infrastructure. We can fix our economy with sound policy decisions from think tanks and academic experts. We can end all human suffering if we just automate everything and turn existence over to machines to do everything for us.

It never occurs to the left that technology often creates as many problems as it solves. Advanced infrastructure in the third world brings questions of how to maintain such infrastructure. Complex systems require ever more complex maintenance. And if the will and human resources aren’t there to do it, then it won’t be done.

Sound economic policy decisions assume objective actors who will act for the ultimate good. But no one is outside the system. Bureaucracy inevitably breeds more bureaucracy because bureaucrats need to make a livelihood. And no matter how much you try to optimize self-interest for the better of all, corruption is always more profitable.

None of this is to say that technology or advanced systems are inherently bad. I mean that technology should be viewed as it actually is: a series of trade-offs. Modern plumbing is undoubtedly a godsend, but there are still costs that come with it. We don’t think of its maintenance as a cost because we are so accustomed to its use, but those costs remain. A significant amount of time, money, and investment from someone is required to keep your plumbing functional.

And if you try to automate those things, the automation itself will require even more maintenance. The best solution isn’t always to double down on ever more complex systems until you eventually run out of energy to maintain them all.

The first rule makes a very simple ask of sci-fi authors: to recognize that technology isn’t necessarily a force for good in and of itself. If science fiction explores the realities of mankind’s relationship with technology, and if it is to undertake this task honestly, it must understand that more is not always better. Right-wing sci-fi must take a fuller approach to technology, dissecting a given technology from all angles, understanding its full implications, and weighing its use. And, if necessary, it must reject that technology outright when the trade-offs are not worth it.

Frank Herbert’s "Dune" is a landmark title partly because the author posits a world where certain technologies have been considered seriously and rejected. And then it dares to imagine the consequences of such a world.

How many plots of "Star Trek" are the exact opposite? How many episodes are spent in which the solution is some clever use of technology or some new knowledge to solve the problem? How many of the problems facing the characters are the lack of information and not the harder choices men are often called to make?

Moving on, the second rule is an extension of the first. If technology is not to uplift humanity, then it is merely an additive to humanity — a humanity that remains constant in its condition.

No one serious contests that technology changes human behavior and the human experience. It obviously does so. But does technology change what it is to be human itself? Has technology changed the overall nature of mankind? Are we fundamentally different as a species than we were 2,000 years ago? If you were to compare the two, would you find something essential in modern man that is not present in his earlier incarnation?

And if you were to strip modern man of his creature comforts, would he be any different than what he was 2,000 years ago? Would his children be any different? Would their grandchildren? If the answer is no, then we must conclude that it is the environment that has changed in the modern world, not the man himself. In this case, if our impossibly sophisticated world has not already made a measurable impact on human nature, why do we expect it to do so in the future?

It is easy to dream of machine life integrating with the organic as it often does in sci-fi. But has anyone ever stopped and asked what would happen if such a future were impossible? We assume that science will progress forever, but what happens if we come across hard limits to our reality that cannot be overcome? What if, dare I say, more knowledge were not the solution to our problems?

What if reality itself has its limitations? And what if one of those limitations was the human condition?

The left has placed its bets on AI and trans-humanism, the latter concept being an oxymoron. To transcend the human means no longer being human, which means we’re talking about something completely different than humanity. Trans-humanism means the rejection of humanity in favor of something else — which is assuredly not human. If we were to put it in actual, real terms, trans-humanism is the abolition — or outright extinction — of the human race.

So, we are effectively placing all our bets on some unproven, utterly unknown entity, and we’re hoping that this entity is magically better than humanity itself. And if we were somehow to create a self-learning AI, there is no guarantee that such an AI would be superior to mankind. Mere intelligence does not equate to morality, and I hope that’s obvious to anyone who’s seen his fair share of cheesy machine uprisings.

To be right-wing in science fiction is to make the simple observation that human nature seems to be sticking around for the long haul, and tampering with it does not guarantee a better world. If there is one single thing that conservatives have succeeded in conserving, it is human nature. And given the absolute failure of conservatives against the left, I think this is direct evidence that human nature is the one thing the left cannot abolish.

The third rule concludes the first two. If progress does not bring us to a better world and human nature is not malleable, then utopia is firmly out of our reach. So many of the left’s stories rely upon the implicit assumption that man is destined to achieve a perfect state of being in this universe, a post-scarcity society where all problems of existence are solved.

But what if mankind’s ultimate purpose is not to strive for infinite material gain? What is mankind if we are not a mere appetite that needs to be satiated, and what if existence entailed more than a constant string of dopamine hits?

This final point touches on a topic that is often discussed but rarely explored properly: the religious aspect of sci-fi. In so many stories, the narrative’s true religion is some version of leftist progress, and all other faiths are an obstacle or an interesting nuisance in the way of this goal. But imagine, for a moment, that another religion were true. What if different metaphysical claims underpinned a narrative?

This is a woefully unexplored part of sci-fi, and I think here is a fertile ground for new artists to break out of genre-defining clichés and exhausted plot lines. Warhammer 40k is interesting not because it reuses a bunch of sci-fi tropes but because it puts a new spin on them. In the setting, the dream of "Star Trek" is inverted. Instead of peace and prosperity, the galaxy is thrown into endless war and strife. Everything is collapsing, and the idea of a secular utopia has been thoroughly snuffed out.

The best parts of 40k are when the authors try to salvage meaning and hope out of this bleak situation. And what do those authors find themselves turning to time and again for new answers? Religion.

The message I want people to take away from this essay is not that I am crossing out tropes with a red marker and saying you cannot use them or else you are a leftist. Rather, it is to question old tropes that seem exhausted and to find new spins on or interpretations of them. Are faster-than-light technologies truly a boon for humanity, or would their use unleash absolute chaos? What are the consequences of a genuinely abstracted consciousness? Is there a point where we say no to certain technologies because their costs are too great?

This is not an exercise in condemning past authors or their works. It is an attempt to understand the progression of ideas in the genre and where those ideas are leading. And finally, it is left to future artists to decide if they want to venture somewhere else in the bold new frontiers of their own sci-fi stories.


Isaac Young