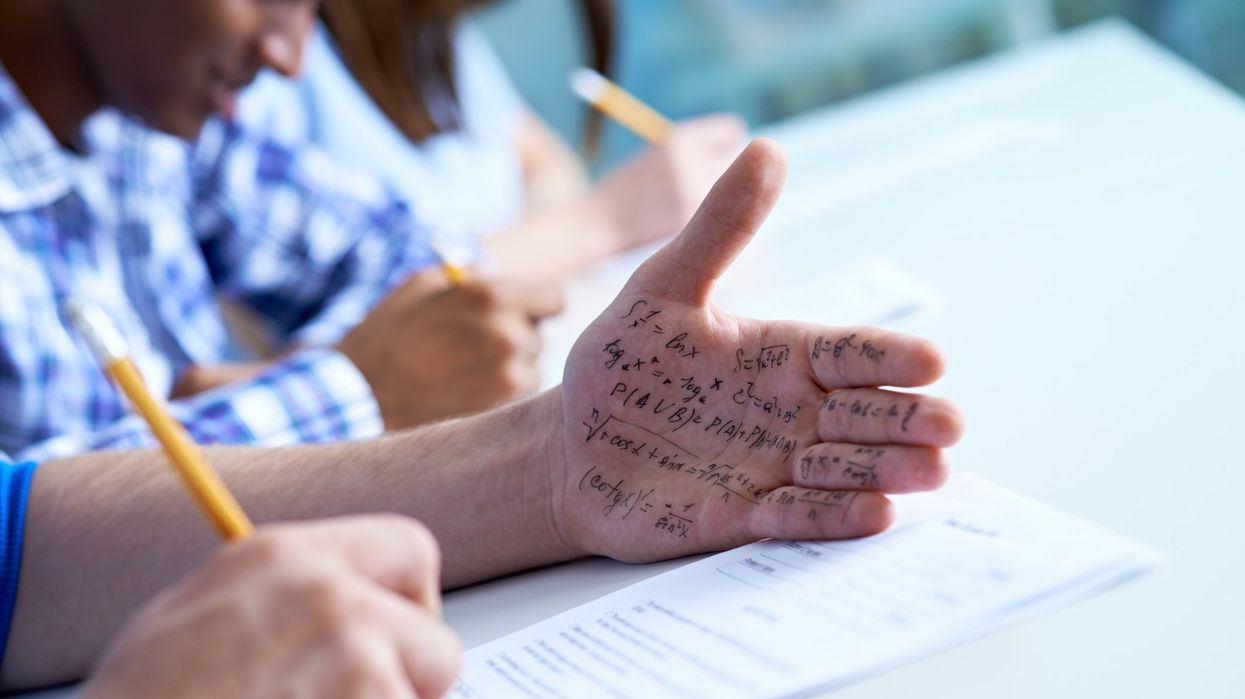

mediaphotos/Getty Images

AI is a powerful tool that can be used to write papers and complete homework, but professors and students say the reality is complicated as adoption of this new technology spreads.

“AI is coming for your job!”

That’s a sentiment shared by many across the world. As AI technology grows more advanced, many are worried about Gen Z’s future in the job market. However, AI already affects Gen Z’s workforce training, also known as college.

Surveys show that between 30% and 89% of college students have used ChatGPT on assignments at least once, which worries most college professors.

“I have mixed thoughts about college students using AI and Chatbot for their assignments,” said Yao-Yu Chih, a Texas State University finance and economics professor. “I recognize the potential of ChatGPT to enhance learning by providing quick access to information, but I am concerned,” he added, “about the risk of academic dishonesty and students relying too heavily on AI, which may hinder their true understanding of the subject matter.”

Justin Blessinger, director of the AdapT Lab at Madison Cyber Labs and a professor of English at Dakota State University, also spoke to Blaze News about his concerns with ChatGPT. “I hear from many professed experts that AI is 'no different' than the internet was, or Google, or autocorrect, and will be 'disruptive' in the same fashion, where the Luddites will eventually quiet down or die off and the enlightened apparatchiks will prevail,” he said.

“But it's not. Not remotely,” Blessinger added. “AI is not the internet. It absolutely replaces thinking for a great many students.”

During my first year at the University of Texas at Austin, many of my professors’ syllabi included an “academic dishonesty” section that prohibited the use of ChatGPT. For example, in my computer science coding course, the professor told students that if they used ChatGPT, they would have to drop the class, receive an F, and/or be reported to the dean of students' office.

“Code written by an automated system such as ChatGPT is not your own effort. Don't even think about turning in such work as your own, or even using it as a basis for your work. We have very sophisticated tools to find such cheating, and we use them routinely,” the syllabus said.

Some college students are concerned too. Over half of college students consider using ChatGPT to be cheating. In a conversation with Blaze News, a second-year government major said, “I have never used artificial intelligence in college because I think that AI hinders academic creativity and growth.” He argued that AI may hinder students’ creative abilities, “stop them from thinking for themselves,” and “make them more inclined to copy and implement ChatGPT’s writing style and ideas for their own writing.”

In my experience, most students use AI moderately by checking over work they have already completed or by asking it to perform simple tasks, like “using it as a grammar checker on papers,” as a fourth-year kinesiology student told Blaze News. “English is not my first language, and using it professionally still proves to be a challenge for me sometimes,” he added.

A minority aren’t too concerned with AI abuse and use it extensively to bypass monotonous tasks. After all, most college English professors assign essays with prompts related to social justice, America’s racist history, or some other left-wing idea.

“I’ve been using [ChatGPT] ever since I heard about it during my senior year of high school,” said a second-year finance student in conversation with Blaze News. For some of his essays, he said he inputs prompts into ChatGPT and “takes whatever [ChatGPT] gives me and sends it through a paraphrasing tool website since it changes up the writing a little bit” to evade the professor’s AI checker.

Most college students, including me, believe ChatGPT is useful for simple tasks or as a search engine but is incompetent at complicated homework problems, such as solving multivariable calculus or linear algebra assignments. Oddly enough, ChatGPT is proficient at explaining complex math ideas conceptually despite being unable to produce the correct numerical solution.

A second-year computer science student told Blaze News he “uses AI quite a bit” in his “day-to-day college work.” He continued, saying, “I’ll use it to get ideas or help get rid of a writer’s block. For essays, it’s helpful to use ChatGPT to find synonyms and rewrite a few sentences to make my writing stronger. But I’ve never used it for math. It doesn’t seem too capable in my experience. I’ve tested it out for coding assignments a few times, but it doesn’t seem capable either.”

When defending their ChatGPT use on assignments, students often mention that they will encounter AI in their future workplaces, so they should be able to use it in their college work. They argue that teachers should embrace new technology and implement liberal ChatGPT policies.

However, over-reliance on ChatGPT may lead to a “potential hazard,” warned John Symons, professor of philosophy at the University of Kansas and founding director of the Center for Cyber-Social Dynamics. Dr. Symons told Blaze News he “think[s] it's really important that people gain some acquaintance with the technology.” But “I think,” Dr. Symons continued, “what would be most useful for young people is to understand the technology, not just be passive consumers of the device. So I think understanding the foundations of the technology, like how it works, is probably more valuable for their futures rather than being passive consumers of generative AI.”

Furthermore, increasing ChatGPT use among college students worsens their already weak reading and writing abilities. Reading closely and analyzing texts teaches students to form ideas and arguments, and writing allows students to slow down in their hectic lives and communicate those ideas and arguments effectively.

“The purpose of college writing has always been to teach students to analyze and think critically. You review what's been written about a topic; you form an opinion of your own; you express that opinion while gesturing toward the best evidence you discovered. You make changes based on what you know or assume about your audience,” Dr. Blessinger told Blaze News. But “using AI writing without first learning to research, argue, and write without [ChatGPT],” he warned, “is lunacy.”

Nowadays, teens are watching hundreds of 30-second TikTok videos, scrolling aimlessly on X, and, worst of all, watching porn rather than consuming content that trains the brain to think critically. It’s much easier to watch a five-minute PragerU video or read a two-sentence tweet explaining what it means to be a conservative than to spend a couple of hours reading Russell Kirk’s “The Conservative Mind.” It is no surprise that students don’t know how to read and write anymore.

In conversation with Blaze News, Jonathan Askonas, assistant professor of politics at the Catholic University of America, argues that “high school students have been basically post-literate for at least the last five years.”

“I don't think [ChatGPT’s] primary effect so far has not necessarily been to damage students' ability to think, read and write, as much as it has acted as a crutch for students who already struggled that were already poorly prepared for college. And then inevitably it also prevents them from growing, or it damages their ability to grow in those areas,” Askonas said. He also added that since students’ reading and writing skills are waning, “the effects [of AI] so far have been an improvement in students’ work.”

Teachers and professors will have to adapt to new technological developments. If teachers begin to design more personalized assignments, as opposed to a “one-size-fits-all” education model, students who use ChatGPT as a crutch may be forced to grow in their literacy. Dr. Symons told Blaze News:

“I think the model for education is going to have to change. We're gonna have to move away from an industrial model of education towards a much more artisanal, personalized model of education where AI can certainly help, but the focus will be on discussion, oral exams, in-class writing assignments, and close reading. ... What happens in the classroom will have to be much more focused on students, on individual skills, and the quality of the reading or the quality of reading skills will have to be the focus. ... I think students will recognize the difference between that kind of personalized or artisanal education and the kind of mass-produced industrial education that they might get through an online course or through a large lecture.”

But in my experience, classes are increasingly mass-produced and offered online, likely because of long COVID laziness. In high school, I took a combination of in-person and online courses so I could go home and eat lunch after my midday basketball practice, even though the same online courses were offered in person by better teachers. Some teachers showed videos they recorded during COVID, while others just left students to learn from an e-textbook. During my first year of college, I took two online courses to make room for internships and extracurriculars. Each had around a thousand students, and one of them showed pre-recorded lectures from a couple of years ago.

However, once professors decide to shift away from mass-produced education, expectations will begin to change, and workplaces will rethink their view of what’s valuable. While some believe humanities degrees and jobs, like journalism, may become obsolete and useless because of AI, Dr. Askonas argues that the humanities might become more “scarce and therefore valuable” due to AI.

“[AI] changes what we expect of our students. It changes where they're weak, and hopefully it changes what [professors] think that they need. So for instance, many college curriculums assume essentially illiterate college students. It’s not because of AI. ... So that means thinking about how you are going to teach attention. How do you teach careful reading? How do you teach? How do you teach students to be self-conscious about the effects that technology has on their own abilities? It's going to change what's valuable.

“So instead of students being expected to be able to use generative AI in their workplace as it changes, you have this question of what remains scarce and therefore valuable. A certain level of rhetorical skill will remain valuable, the ability to prompt an AI in sophisticated ways, and using one's knowledge of rhetoric, history, and subject matter will be even more valuable. ... This is actually more beneficial for the humanities compared to people who just want to code. But even within the world of coding, I think that we're going to find that the irreplaceable level of sophistication of systems thinking and fundamental thinking in programming that will still remain very human, and it will be replaced as sort of the kind of code monkey turning out code stuff.”

Ethan Xu