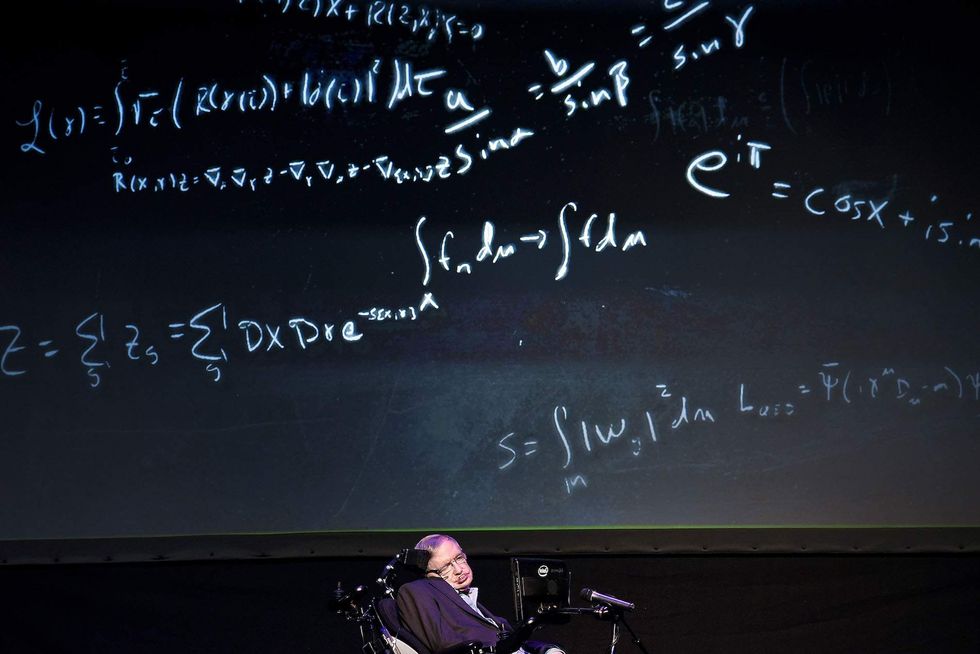

British theoretical physicist Professor Stephen Hawking said that a world government might be needed to save humanity from a robot holocaust. “The real risk with AI isn't malice but competence,” he said. “A super intelligent AI will be extremely good at accomplishing its goals, and if those goals aren't aligned with ours, we're in trouble.” (Desiree Martin/AFP/Getty Images)